26 May 2015 by Remco Bouckaert

The main improvement is in BEAUti fixes. A number of bugs are fixed, especially with cloning and linking/unlinking partitions. The templates for tree priors have been reorganised, since they sometimes interfered with each other.

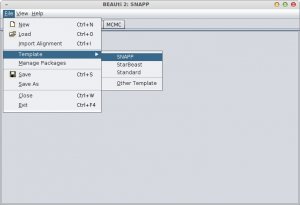

The File menu in BEAUti is reorganised so it is easier to access files from packages. On the Mac, packages are installed in a rather hard-to-find folder. With the new menu, it is easy to select a working directory, then import an alignment or open an example file from the package.

The File menu has entries for creating new partitions directly, which saves one click every time an alignment is imported.

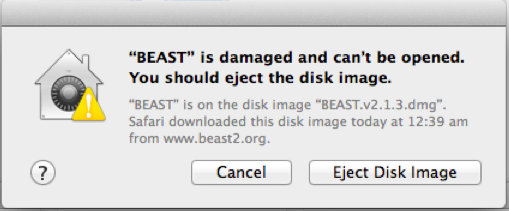

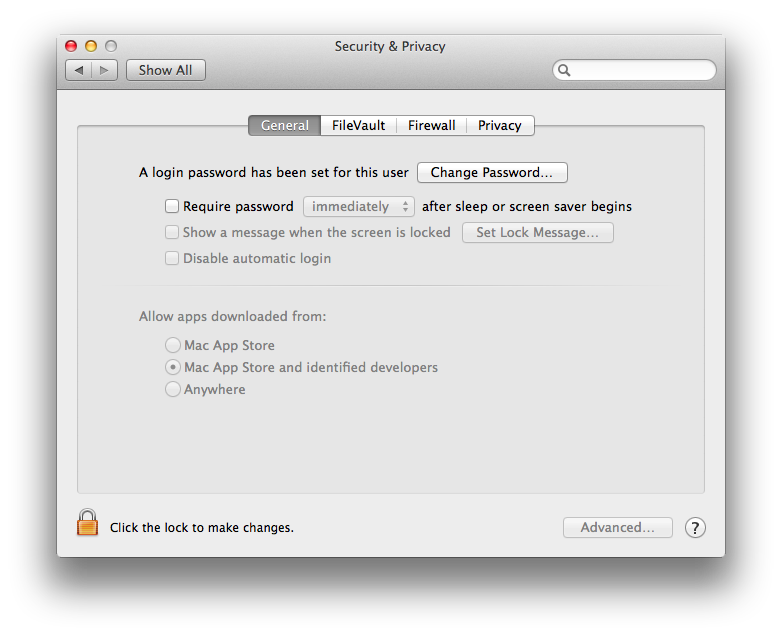

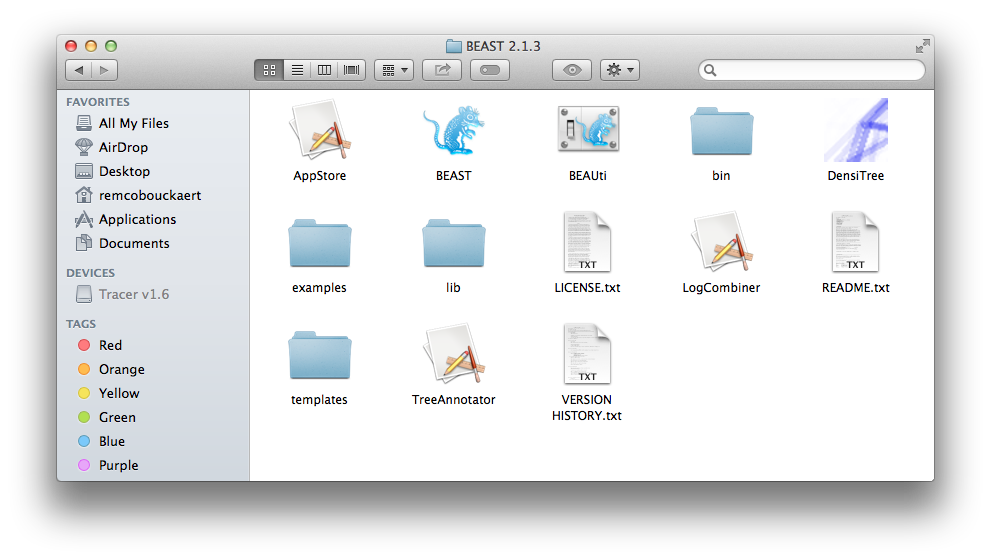

The build scripts have been updated so that each application automatically loads beast.jar instead of having the whole file duplicated in each application, giving a smaller footprint on Mac and Windows. This also makes it easier to update to the latest development build: just replace the beast.jar file with the one from here and you are running the development version.

There are a few bug fixes, in particular for issues with synchronisation affecting ThreadedTreeLikelihood from the BEASTLabs package, and a fix in EigenDecomposition affecting asymmetric rate matrices (which caused problems with ancestral reconstruction from the BEAST-CLASSIC package).

Further, the code is updated so that dependency on taxon order is reduced, making the handling of multiple partitions more robust.

The LogCombiner command line interface is improved so it can take multiple log files as arguments, which means you can use wildcards to specify multiple files, like so:

logcombiner -log beast.*.log
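To see which files a pattern like the one above would pick up, here is a minimal Python sketch. It only mimics shell-style wildcard matching with the standard library and does not call LogCombiner; the file names in the listing are hypothetical.

```python
from fnmatch import fnmatchcase


def matching_logs(names, pattern="beast.*.log"):
    """Return the names a shell-style wildcard pattern would select, sorted."""
    return sorted(n for n in names if fnmatchcase(n, pattern))


# Hypothetical directory listing, for illustration only.
listing = ["beast.1.log", "beast.2.log", "beast.1.trees", "other.log", "beast.xml"]
selected = matching_logs(listing)
print(selected)
```

Only the names that start with `beast.` and end with `.log` are selected, so tree files and unrelated logs in the same directory are left out.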

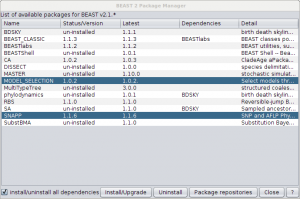

With this version, v2.2 and its packages can keep running alongside v2.3, so there is no need to delete old packages any more.

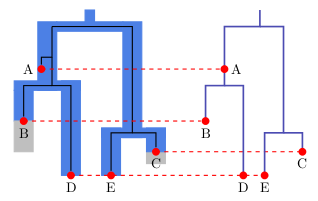

DensiTree is updated to v2.2.2.

Packages added since the last update on this blog

There are now 20 packages, many of them added since the last update.

MGSM: multi-gamma site model

Unlike the standard gamma rate heterogeneity model, which uses a single shape parameter, the multi-gamma site model allows the shape parameter to vary over branches. This often leads to better model fit and can result in significantly different divergence time estimates. Preprint on bioRxiv.

Comes with BEAUti support.
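To give a feel for what the shape parameter does, here is a rough Python sketch of the standard single-shape idea, not of the MGSM package itself. It approximates the mean rate in each of four equal-probability categories of a gamma distribution with mean 1 by Monte Carlo sampling. The function name, the number of categories, and the sample size are assumptions made for illustration.

```python
import random


def category_rates(shape, n_categories=4, n_samples=20000, seed=1):
    """Approximate the mean rate of each equal-probability category of a
    Gamma(shape, scale=1/shape) distribution (mean 1) by Monte Carlo."""
    rng = random.Random(seed)
    # gammavariate(alpha, beta) takes shape alpha and scale beta, so mean = 1.
    draws = sorted(rng.gammavariate(shape, 1.0 / shape) for _ in range(n_samples))
    size = n_samples // n_categories
    return [sum(draws[i * size:(i + 1) * size]) / size for i in range(n_categories)]


# Small shape: strong rate variation among sites; large shape: nearly equal rates.
for shape in (0.5, 5.0):
    print(shape, [round(r, 2) for r in category_rates(shape)])
```

A small shape spreads the category rates far apart, while a large shape pulls them towards 1, which is why letting the shape differ between branches changes how rate variation is modelled along the tree.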

Spherical geography

Phylogeographical model that assumes heterogeneous diffusion on a sphere, which for large areas can give significantly different results than continuous geography by diffusion on a plane. For a tutorial see here. Preprint on bioRxiv.

Comes with BEAUti support.
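The difference between a sphere and a plane can be illustrated with plain distances. The sketch below is not the package's diffusion model. It only compares the great-circle (haversine) distance with a naive distance that treats latitude and longitude as flat x/y coordinates, which is an assumption made here for illustration. The function names and the Earth radius constant are likewise illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, an approximation


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    # Clamp to 1.0 to guard against rounding slightly above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def planar_km(lat1, lon1, lat2, lon2):
    """Naive distance treating latitude/longitude as flat x/y coordinates."""
    km_per_degree = 2 * math.pi * EARTH_RADIUS_KM / 360
    return km_per_degree * math.hypot(lat2 - lat1, lon2 - lon1)


# Nearby points on the equator: the two distances nearly agree.
print(great_circle_km(0, 0, 0, 1), planar_km(0, 0, 0, 1))
# Far apart at high latitude: the flat treatment overstates the distance.
print(great_circle_km(60, 0, 60, 90), planar_km(60, 0, 60, 90))
```

For small areas the two measures are close, but over large areas, and especially away from the equator, they diverge strongly.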

bModelTest

A package for nucleotide models that uses reversible jump between a number of substitution models, with and without gamma rate heterogeneity and with and without a proportion of invariant sites. These parts of the model are estimated during the MCMC run and no longer need to be specified beforehand. Preprint on bioRxiv.

Comes with BEAUti support.

Morphological-models

Implements the LewisMK model for morphological data, see morphological models. Deals with ambiguities and nexus files for morphological data.

Comes with BEAUti support.

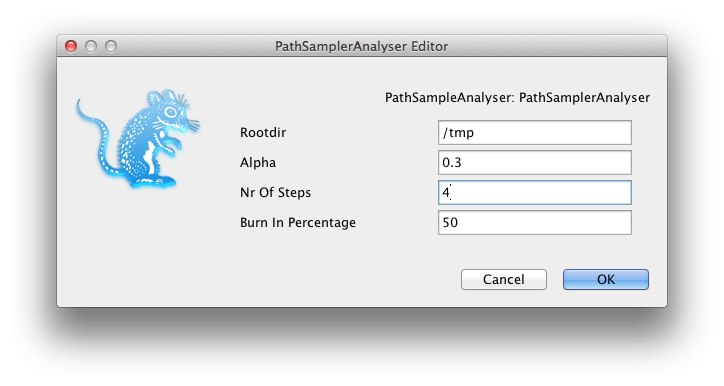
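For readers unfamiliar with the Mk model, the following Python sketch computes its transition probabilities for k states, assuming equal rates between all states and rates scaled to one expected change per unit of branch length. It is a textbook-style illustration, not code from the package, and the function name is made up.

```python
import math


def mk_transition_probs(k, t):
    """Transition matrix of the k-state Mk model after branch length t,
    with rates scaled to one expected change per unit of branch length."""
    decay = math.exp(-k * t / (k - 1))
    same = 1.0 / k + (k - 1) / k * decay   # probability of ending in the start state
    diff = 1.0 / k - decay / k             # probability of ending in any other given state
    return [[same if i == j else diff for j in range(k)] for i in range(k)]


# Binary character (k=2) on a branch of length 0.1.
for row in mk_transition_probs(2, 0.1):
    print([round(p, 4) for p in row])
```

On a zero-length branch the matrix is the identity, and on very long branches every entry approaches 1/k.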

Sampled ancestors

Described previously.

The coalescent SIR model

Described previously.

Metropolis coupled MCMC

Aka MCMCMC or MC3. More here.

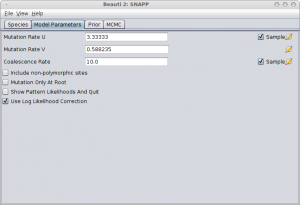
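The core of Metropolis coupled MCMC is the swap step between chains run at different temperatures. The sketch below shows the usual acceptance rule for that step, assuming chain i targets the posterior raised to the power beta_i. It is a generic illustration with hypothetical function names, and it does not reflect how the package chooses temperatures or schedules swaps.

```python
import math
import random


def swap_log_ratio(logp_i, logp_j, beta_i, beta_j):
    """Log acceptance ratio for swapping the states of chains i and j,
    where chain i targets posterior**beta_i and chain j posterior**beta_j."""
    return (beta_i - beta_j) * (logp_j - logp_i)


def accept_swap(logp_i, logp_j, beta_i, beta_j, rng=random):
    """Metropolis rule: accept with probability min(1, exp(log ratio))."""
    log_ratio = swap_log_ratio(logp_i, logp_j, beta_i, beta_j)
    return log_ratio >= 0 or rng.random() < math.exp(log_ratio)


# Cold chain (beta=1) sits at log posterior -10, heated chain (beta=0.5) at -5:
# the heated chain found a better state, so the swap is always accepted.
print(swap_log_ratio(-10.0, -5.0, 1.0, 0.5), accept_swap(-10.0, -5.0, 1.0, 0.5))
```

Swaps that move a better state to the cold chain are always accepted, while swaps in the other direction are accepted only with a probability that shrinks as the temperature gap or the difference in log posterior grows.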

Species delimitation with DISSECT and STACEY

Methods for species delimitation based on the multispecies coalescent, useful for data that could be analysed using *BEAST. More on this soon.

Bayesian structured coalescent approximation with BASTA

An approximation of the structured coalescent that allows a larger number of demes than the pure structured coalescent as implemented, for example, in the MultiTypeTree package.

More tutorials

Check out the list of tutorials, and howtos on the wiki.